Person fit statistics to identify irrational response patterns for multiple-choice tests in learning evaluation

DOI:

https://doi.org/10.47750/pegegog.12.04.05

Keywords:

Person Fit, Irrational Response Patterns, Multiple Choice Tests

Abstract

This study analyzes and describes the response patterns of school exam participants using person-fit statistics. It is a quantitative study of an elementary school social science examination comprising 15 multiple-choice items and 137 participant answer sheets. Data were collected through documentation: the participants' exam responses were gathered and then scored against the answer key. Person fit was analyzed statistically under the Rasch model, using the R program with the ltm (latent trait model) package. The results show that 93 examinees (about 67.9%) were detected as fit, that is, categorized as having rational response patterns, while 44 examinees (about 32.1%) were detected as not fit, that is, categorized as having irrational response patterns. The study concludes that most of the elementary school exam participants had a fitting (rational) response pattern.
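To make the idea of person fit under the Rasch model concrete, the following is a minimal Python sketch of the standardized log-likelihood statistic (commonly written lz) for a single examinee. It is an illustration only and not the ltm code used in the study: the abstract does not say which person-fit statistic or cutoff was applied, so the choice of lz, the one-sided cutoff of -1.645, the function names, and the five item difficulties are all assumptions made here. The ability value and item difficulties are treated as known, whereas in practice they would be estimated from the data.

```python
import math


def rasch_prob(theta, b):
    """Probability of a correct response under the Rasch model."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))


def lz_statistic(responses, theta, difficulties):
    """Standardized log-likelihood person-fit statistic for one examinee.

    responses    -- list of 0/1 item scores
    theta        -- ability value (assumed known for this sketch)
    difficulties -- Rasch item difficulties, one per item (assumed known)
    """
    log_lik = 0.0    # observed log-likelihood of the response pattern
    expected = 0.0   # its expectation under the model
    variance = 0.0   # its variance under the model
    for u, b in zip(responses, difficulties):
        p = rasch_prob(theta, b)
        q = 1.0 - p
        log_lik += u * math.log(p) + (1 - u) * math.log(q)
        expected += p * math.log(p) + q * math.log(q)
        variance += p * q * math.log(p / q) ** 2
    return (log_lik - expected) / math.sqrt(variance)


def is_misfit(lz, cutoff=-1.645):
    """Flag a pattern as misfitting when lz falls below the cutoff.

    The cutoff is an assumed one-sided 5% normal critical value,
    not a criterion taken from the study.
    """
    return lz < cutoff


# Hypothetical item difficulties, ordered from easiest to hardest.
difficulties = [-2.0, -1.0, 0.0, 1.0, 2.0]

# An examinee of average ability who answers the easy items correctly...
consistent = lz_statistic([1, 1, 1, 0, 0], 0.0, difficulties)
# ...versus one who misses the easy items but answers the hard ones correctly.
reversed_pattern = lz_statistic([0, 0, 1, 1, 1], 0.0, difficulties)

print(is_misfit(consistent), is_misfit(reversed_pattern))
```

Both hypothetical examinees have the same raw score of 3, yet only the second pattern is flagged: large negative values of the statistic indicate responses that are unlikely given the examinee's ability, which is the sense in which a pattern is called irrational here.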

Downloads

Published

How to Cite

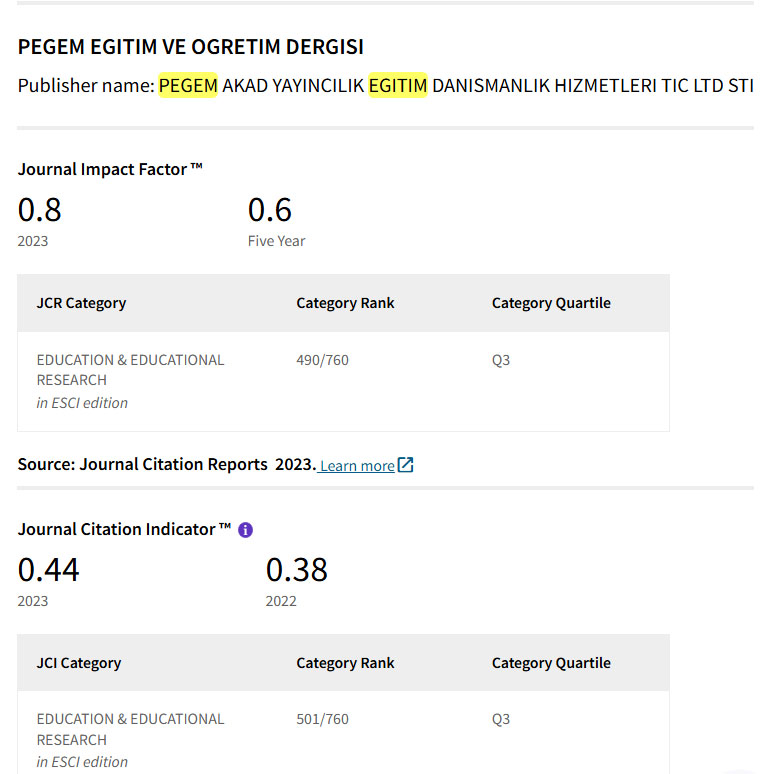

Issue

Section

License

Copyright (c) 2022 Pegem Journal of Education and Instruction

This work is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License.

Attribution — You must give appropriate credit, provide a link to the license, and indicate if changes were made. You may do so in any reasonable manner, but not in any way that suggests the licensor endorses you or your use.

NonCommercial — You may not use the material for commercial purposes.

No additional restrictions — You may not apply legal terms or technological measures that legally restrict others from doing anything the license permits.